Google brings Gemini and other accessibility tools across Android devices

What you need to know

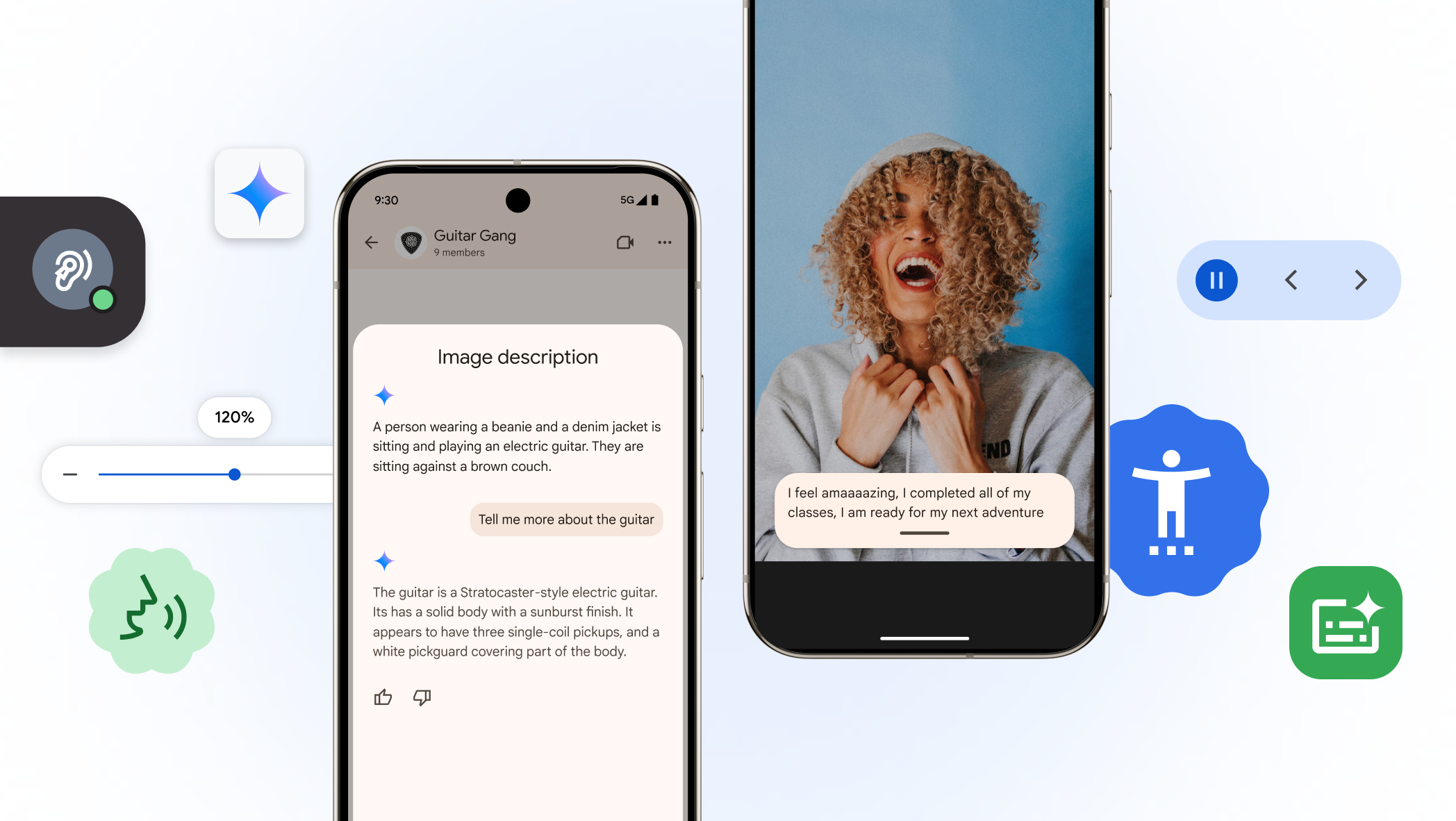

- Google is enhancing Android with AI-powered accessibility features, including improved TalkBack with image and screen questioning.

- New “Expressive Captions” will use AI to provide real-time captions across apps, conveying not just words but also the speaker’s tone and emotions.

- Developers now have access to Google’s open-source Project Euphonia repository to build and personalize speech recognition tools for diverse speech patterns.

- This new version of Expressive Captions is rolling out in English in the U.S., U.K., Canada, and Australia for devices running Android 15 and above.

Each year, Google announces a slew of features for Global Accessibility Awareness Day (GAAD), and this year isn’t any different. Today (May 15), Google announced that it is rolling out new updates to its products across Android and Chrome, and adding new resources for developers who are building speech recognition tools.

Google is highlighting how it’s integrating Gemini and AI into its accessibility features to improve usability for users with low vision or hearing loss.

Google is expanding its existing TalkBack option with the ability for people to ask questions and get responses about an image they were sent or are viewing. That means the next time a friend texts you a photo of their new guitar, you can get a description of the image and ask follow-up questions about the make and color, or even what else is in the image.

Taking it a step further with AI, Google says users can get descriptions not only of an image, but also of the entire screen they’re viewing. For instance, “if you’re shopping for the latest sales on your favorite shopping app, you can ask Gemini about the material of an item or if a discount is available,” the tech giant explained.

Google is launching a much-needed feature called Expressive Captions, which helps the listener gauge the mood and emotion of the video. The tech giant states that phones will be able to provide real-time captions for anything with sound across apps.

It uses AI to capture not only what someone says but, more importantly, how they say it. For instance, the captions can reflect a commentator calling out an “amaaazing shot” during an intense game, or a video message that says not “no” but “nooooo.”

This AI-powered feature will be able to identify when a speaker is dragging out words, to give you accurate captions. It can also spot when people are whistling or clearing their throats, making captions less redundant.

This new version of Expressive Captions is rolling out in English in the U.S., U.K., Canada, and Australia for devices running Android 15 and above.

Lastly, Google is taking steps to continue to make its digital platform more accessible and inclusive. It is giving developers access to its open-source repository from Project Euphonia (see “How AI improves products for people with impaired speech”).

By opening up its resources, Google will let developers around the world personalize audio tools for research, which could help train their models for diverse speech patterns, the company explained.
